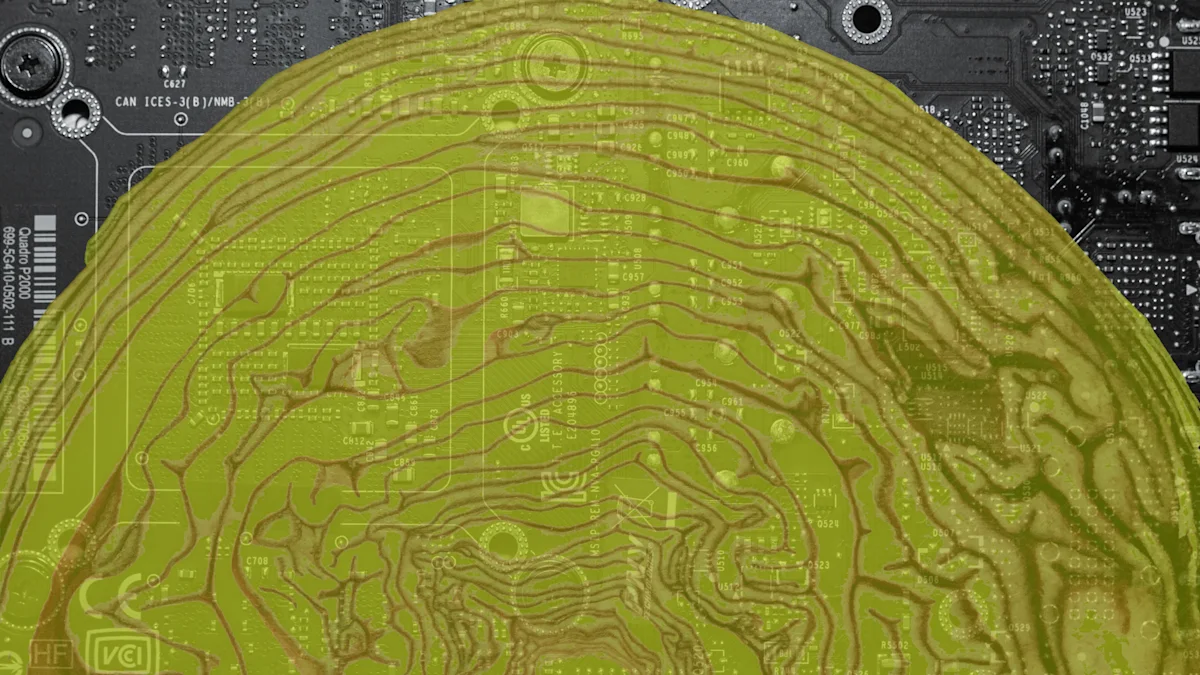

"Ogres are like onions. Onions have layers. Ogres have layers." - Shrek

The same should be true for AI tools.

Layering can be one of the most effective techniques in software design. Think of how we separate concerns in a web app: the UI handles interaction, the controller manages logic, and the database stores data. Or how relational databases separate the logical schema, query planner, and storage engine, each focused on a different layer of abstraction. These layers keep things modular and manageable. But we seem to forget this lesson when it comes to AI agents, especially those using the Model Context Protocol (MCP) to interact with APIs.

Too often we build monolithic tools that throw everything at the AI at once: all the endpoints, all the request schemas, all the business logic. The result? Confused models, malformed API calls, and brittle workflows. We expect the LLM to do it all without guiding it through the structure of the system.

It doesn’t have to be this way.

The Challenge: Guiding AI Through Complexity

Giving an LLM a single monolithic MCP tool is like dropping a new hire into your company Slack and telling them, “Everything you need is in here, just ask the right questions.” Sure, it might work. But more likely, they’ll:

- Ask the wrong people.

- Miss critical docs.

- Misunderstand how things are wired together.

- And ultimately waste time or make mistakes.

AI agents face the same problem when we hand them overloaded tools. They need scaffolding, not just access.

Introducing the "Layered Tool Pattern"

Rather than building single, complex tools that handle everything from discovery to execution, the Layered Tool Pattern breaks MCP tools into distinct functional layers that work together. Like ogres (and onions), these layers create a more structured approach that guides the LLM through progressive steps.

An Example of Layering

- Discovery Layer: Service discovery for the LLM to understand what's available.

- Planning Layer: Tells the LLM how to formulate correct calls and workflows.

- Execution Layer: Tools that actually perform the requested actions.
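These layers imply an order: discover first, plan next, execute last. In the snippets shown in this post, that order is expressed through tool descriptions ("Call me before…"). A server could also track it. The sketch below assumes a hypothetical per-session `LayerTracker` class (not part of the MCP SDK or the Square MCP Server) that records discovery and planning calls, and reports which layer the model should use next.

```typescript
// Hypothetical layer tracker: not part of the MCP SDK or the Square MCP Server.
type Layer = "discovery" | "planning" | "execution";

class LayerTracker {
  // Services the model has already explored via the discovery layer.
  private discovered = new Set<string>();
  // "service.method" keys the model has already planned via the planning layer.
  private planned = new Set<string>();

  // Records a discovery call for a service.
  discover(service: string): void {
    this.discovered.add(service);
  }

  // Records a planning call; planning requires prior discovery of the service.
  plan(service: string, method: string): void {
    if (!this.discovered.has(service)) {
      throw new Error(`Call discovery for '${service}' before planning.`);
    }
    this.planned.add(`${service}.${method}`);
  }

  // True only if the same service/method went through the planning layer.
  canExecute(service: string, method: string): boolean {
    return this.planned.has(`${service}.${method}`);
  }

  // Reports which layer the model should use next for a given service/method.
  nextLayer(service: string, method: string): Layer {
    if (!this.discovered.has(service)) return "discovery";
    if (!this.planned.has(`${service}.${method}`)) return "planning";
    return "execution";
  }
}

// Example session that follows the layers in order.
const tracker = new LayerTracker();
console.log(tracker.nextLayer("payments", "list")); // discovery
tracker.discover("payments");
console.log(tracker.nextLayer("payments", "list")); // planning
tracker.plan("payments", "list");
console.log(tracker.canExecute("payments", "list")); // true
```

The layer names are only the ones used in this post; a different domain would change the `Layer` type and the checks, not the idea.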

Let's see how this works in practice.

Real-World Example: Square MCP Server

To demonstrate the Layered Tool Pattern in action, let's examine the Square MCP Server, which provides a real-world implementation of this approach. Square has tons of APIs, so simply surfacing all of them to an LLM would quickly overwhelm it or lead it to call the wrong ones.

How the Square MCP Server Uses Layers

The Square MCP Server puts the Layered Tool Pattern to work with a simple three-layer flow that makes more than 30 APIs and 200+ endpoints usable through only 3 MCP tools!
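Why does the tool count stay at three? In a flat design the tool list grows with every endpoint, while in a layered design the catalog becomes data that the tools look up. The sketch below compares the two over a hypothetical `serviceMethodsMap`. The name mirrors the variable used in the `make_api_request` description later in this post, but the shape and contents here are invented and far smaller than Square's real catalog, and the tool descriptions are paraphrased placeholders.

```typescript
// Hypothetical service -> methods map (invented for illustration).
const serviceMethodsMap: Record<string, string[]> = {
  catalog: ["list", "create", "retrieve", "delete"],
  payments: ["list", "create", "get", "cancel"],
  customers: ["list", "create", "update"],
};

interface ToolInfo {
  name: string;
  description: string;
}

// Flat design: one tool per service/method pair, so the tool list
// grows with every endpoint the API adds.
function flatTools(map: Record<string, string[]>): ToolInfo[] {
  const tools: ToolInfo[] = [];
  for (const service of Object.keys(map)) {
    for (const method of map[service] ?? []) {
      tools.push({ name: `${service}_${method}`, description: `Call ${service}.${method}.` });
    }
  }
  return tools;
}

// Layered design: always three tools; the catalog only appears as data,
// here inside the execution tool's description (as in make_api_request).
function layeredTools(map: Record<string, string[]>): ToolInfo[] {
  const services = Object.keys(map).map((name) => name.toLowerCase()).join(", ");
  return [
    { name: "get_service_info", description: "List the methods of a service." },
    { name: "get_type_info", description: "Describe the request type of a method." },
    { name: "make_api_request", description: `Execute a request. Available services: ${services}.` },
  ];
}

console.log(flatTools(serviceMethodsMap).length); // 11
console.log(layeredTools(serviceMethodsMap).length); // 3
```

Adding a service or method to the map grows the flat list but leaves the layered list at three entries.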

Layer 1: Discovery - get_service_info

```typescript
server.tool(
  "get_service_info",
  "Get information about a Square API service. Call me before trying to get type info",
  {
    service: z.string().describe("The Square API service category (e.g., 'catalog', 'payments')")
  },
  async (params) => {
    // ...
  }
);
```

This first layer allows the AI to discover what operations are available within a specific service. It doesn't execute anything; it simply provides metadata about available capabilities, helping the AI understand what's possible.
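A discovery handler can stay small. The sketch below is not the Square server's code: it assumes a hypothetical `registry` of method summaries and a plain function `getServiceInfo` standing in for the body of the `get_service_info` callback. It returns text only and never touches the API; an MCP tool callback would typically wrap such a string in a `{ type: "text" }` content item before returning it.

```typescript
// Hypothetical registry of method summaries per service (illustrative only,
// not Square's actual metadata).
interface MethodSummary {
  method: string;
  summary: string;
}

const registry: Record<string, MethodSummary[]> = {
  catalog: [
    { method: "list", summary: "List catalog objects." },
    { method: "create", summary: "Create a catalog object." },
  ],
  payments: [
    { method: "list", summary: "List payments." },
    { method: "create", summary: "Create a payment." },
  ],
};

// Discovery-layer sketch: returns metadata only and never calls the API.
// Unknown services produce a message listing valid choices, so the model
// can correct itself instead of guessing.
function getServiceInfo(service: string): string {
  const key = service.toLowerCase();
  const methods = registry[key];
  if (!methods) {
    return `Unknown service '${service}'. Available services: ${Object.keys(registry).join(", ")}.`;
  }
  const lines = methods.map((m) => `- ${m.method}: ${m.summary}`);
  return [`Methods for '${key}':`].concat(lines).join("\n");
}

console.log(getServiceInfo("payments"));
```

Returning the list of valid services on a miss is a small design choice that keeps the model inside the discovery layer until it has a real service name.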

Layer 2: Planning - get_type_info

```typescript
server.tool(
  "get_type_info",
  "Get type information for a Square API method. You must call this before calling the make_api_request tool.",
  {
    service: z.string().describe("The Square API service category (e.g., 'catalog', 'payments')"),
    method: z.string().describe("The API method to call (e.g., 'list', 'create')")
  },
  async (params) => {
    // ...
  }
);
```

The second layer helps the AI understand the specific requirements for making a call. It provides detailed parameter information, validation rules, and expected response formats. This layer guides the AI in formulating a correct request without executing anything yet.
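The planning layer turns a service and method into a precise description of a valid request. This sketch assumes a hypothetical `typeRegistry` keyed by `"service.method"`; the field names are placeholders rather than Square's real request types, and `getTypeInfo` is an illustrative stand-in for the `get_type_info` callback body. Besides listing fields, it returns a minimal request shape the model can fill in.

```typescript
// Hypothetical parameter description used by the planning layer.
interface ParamSpec {
  name: string;
  type: "string" | "number" | "boolean";
  required: boolean;
  description: string;
}

// Illustrative type registry keyed by "service.method"; field names are
// placeholders, not Square's real request types.
const typeRegistry: Record<string, ParamSpec[]> = {
  "catalog.list": [
    { name: "cursor", type: "string", required: false, description: "Pagination cursor from a previous call." },
  ],
  "catalog.create": [
    { name: "name", type: "string", required: true, description: "Display name of the item." },
    { name: "price", type: "number", required: true, description: "Price in the smallest currency unit." },
  ],
};

// Planning-layer sketch: describes what a correct request looks like
// (fields, types, required flags) without sending anything.
function getTypeInfo(service: string, method: string): string {
  const key = `${service.toLowerCase()}.${method.toLowerCase()}`;
  const params = typeRegistry[key];
  if (!params) {
    return `No type information for '${key}'. Call get_service_info first to list valid methods.`;
  }
  // Skeleton containing only the required fields, with type placeholders.
  const example: Record<string, string> = {};
  for (const p of params) {
    if (p.required) example[p.name] = `<${p.type}>`;
  }
  const fields = params.map(
    (p) => `- ${p.name} (${p.type}${p.required ? ", required" : ""}): ${p.description}`
  );
  return [`Request fields for ${key}:`]
    .concat(fields)
    .concat([`Minimal request shape: ${JSON.stringify(example)}`])
    .join("\n");
}

console.log(getTypeInfo("catalog", "create"));
```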

Layer 3: Execution - make_api_request

```typescript
server.tool(
  "make_api_request",
  `Unified tool for all Square API operations. Be sure to get types before calling. Available services:
  ${Object.keys(serviceMethodsMap).map(name => name.toLowerCase()).join(", ")}.`,
  {
    service: z.string().describe("The Square API service category (e.g., 'catalog', 'payments')"),
    method: z.string().describe("The API method to call (e.g., 'list', 'create')"),
    request: z.object({}).passthrough().optional().describe("The request object for the API call.")
  },
  async (params) => {
    // ...
  }
);
```

The third layer actually performs the operation, sending the request to Square's API and returning the results. This layer only executes after the AI has used the previous layers to understand what's available and how to format the request correctly.
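The execution layer can reuse the planning layer's type information as a last check before anything is sent. Whether the real server validates requests this way is not stated in this post. In this sketch, `typeRegistry`, `validateRequest`, `makeApiRequest`, and the injected `send` callback are all hypothetical; `send` stands in for whatever client actually calls Square's API, which keeps the example runnable without network access.

```typescript
// Hypothetical, self-contained stand-ins: the registry, validator, and send
// callback are illustrative and not taken from the Square MCP Server.
interface ParamSpec {
  name: string;
  type: "string" | "number" | "boolean";
  required: boolean;
}

const typeRegistry: Record<string, ParamSpec[]> = {
  "catalog.create": [
    { name: "name", type: "string", required: true },
    { name: "price", type: "number", required: true },
  ],
};

type Sender = (service: string, method: string, request: Record<string, unknown>) => Promise<unknown>;

interface ApiResult {
  ok: boolean;
  result?: unknown;
  errors?: string[];
}

// Checks a request against the same type info the planning layer exposes.
function validateRequest(key: string, request: Record<string, unknown>): string[] {
  const params = typeRegistry[key];
  if (!params) return [`Unknown method '${key}'. Call get_type_info first.`];
  const errors: string[] = [];
  for (const p of params) {
    const value = request[p.name];
    if (value === undefined) {
      if (p.required) errors.push(`Missing required field '${p.name}'.`);
    } else if (typeof value !== p.type) {
      errors.push(`Field '${p.name}' should be ${p.type}, got ${typeof value}.`);
    }
  }
  return errors;
}

// Execution-layer sketch: only forwards requests that pass validation;
// otherwise it returns the errors so the model can correct its request.
async function makeApiRequest(
  service: string,
  method: string,
  request: Record<string, unknown>,
  send: Sender
): Promise<ApiResult> {
  const key = `${service.toLowerCase()}.${method.toLowerCase()}`;
  const errors = validateRequest(key, request);
  if (errors.length > 0) return { ok: false, errors };
  return { ok: true, result: await send(service, method, request) };
}

// Usage with a fake sender that echoes its input instead of calling Square.
const fakeSend: Sender = async (service, method, request) => ({ service, method, request });

makeApiRequest("catalog", "create", { name: "Latte" }, fakeSend).then((r) => {
  // 'price' is missing, so r.ok is false and fakeSend is never called.
  console.log(r.ok, r.errors);
});
```

Because a failed check comes back as a plain message naming the missing or mistyped field, the model gets a concrete hint and can return to the planning layer instead of retrying blindly.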

Check it out in action here: Square @ Cloudflare MCP Demo Day

Layers Aren’t Just for Ogres

LLMs are powerful, but they still need well-designed tools that guide them rather than confuse them. The Layered Tool Pattern gives you a principled way to structure MCP tools so your AI agents don’t have to guess their way through your APIs.

Layering doesn’t have to follow a single formula. “Discovery, planning, execution” is just one example. You might choose different layers depending on your domain, your APIs, or the kinds of reasoning you expect the model to do. What matters is the structure: guiding the model step-by-step rather than throwing it into a monolithic blob of logic and hoping for the best.

Layering improves reliability, debuggability, and maintainability, not just for humans, but for the agents working alongside us.

So if you're building AI-integrated systems, remember:

"Peeling back layers helps ogres make sense of themselves... and it helps AI make sense of your stack."

Acknowledgments

The core idea behind the Layered Tool Pattern was first proposed by Max Novich, and brought to life in production by Mike Konopelski, who implemented the pattern in the Square MCP Server. Huge thanks to both of them for showing what thoughtful AI tool design can look like in practice.